Studying vision and how we process what we see is a popular and accessible way for neuroscientists to study the brain. For a long time, they thought they had some things worked out: vision was guided by a hierarchical system divided into basic low-order tasks, such as orientation, luminance, depth and motion, and high-order tasks that impart meaning and recognition to the things we look at, with different parts of the brain responsible for each. But research by young scientists like Dr Dorita HF Chang of the Department of Psychology is showing that vision processing, like many matters to do with the brain, is a lot more complicated than the early science indicated.

Dr Chang has been focussing on low-order functions in particular. The original thinking was that these were processed almost independently, so if an object moved up or down, the neurons responsible for motion would simply detect the motion and let the regions responsible for high-order tasks deal with identifying the object.

But Dr Chang has found otherwise. In a series of experiments, she has demonstrated that the nature of the objects can alter the sensitivity of neurons, as measured by functional magnetic resonance imaging (fMRI). The more familiar an object is to us, the more sensitive our low-order neurons become to its motion. Moreover, people who are trained to become familiar with a previously unknown object develop heightened sensitivity to its motion over time.

Put another way, experience affects what was supposed to be a purely biological function. “Ultimately, what we perceive is not just a reconstruction of which neuron is firing, but a product of experience,” she said.

One object that humans are highly experienced in recognising is the human face, and Dr Chang used that insight to construct tests that support her point.

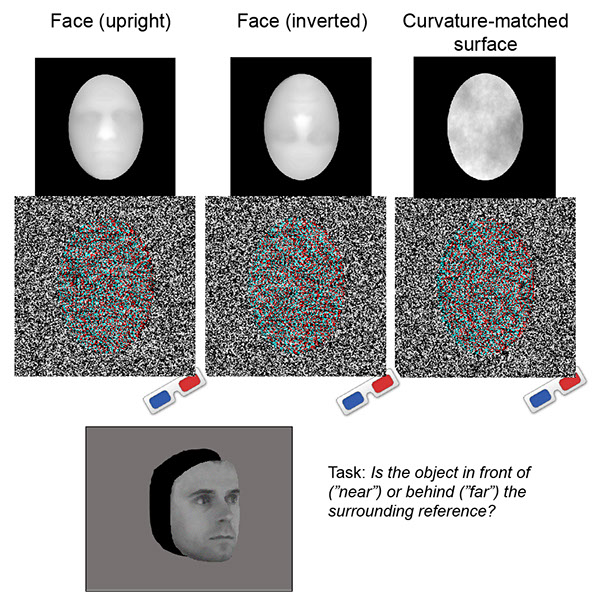

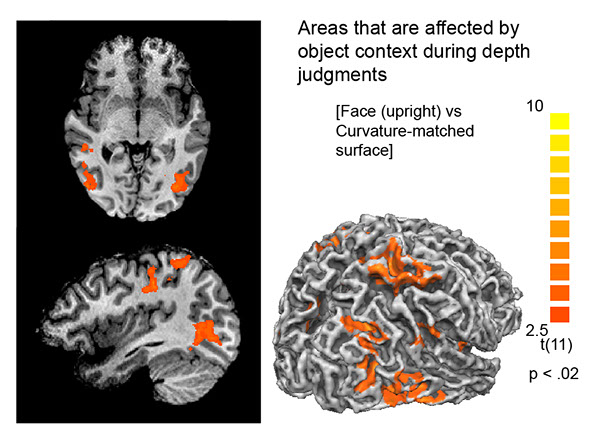

In one line of experiments focussed on 3D vision, subjects were asked to indicate whether an object was in front of or behind a reference point while their brain activity was also monitored with fMRI. The object was a simple surface or a face, and it turned out that not only did the two affect position judgments differentially, but there were also more depth-related neurons activated in the brain in response to faces during the task. An upside-down face was also used but it did not have the same impact on sensitivities as a face with upright orientation.

Advantage of recognition

“The result was surprising because technically, the task did not involve processing what the object was or its orientation,” she said.

“The whole novelty of this research is that the networks we are looking at are not solely modular or standalone. 3D sensitivity would be considered a low-level feature and you would think it should not be influenced by higher-level meaning, but that seems to be happening here.”

Another set of experiments focussed on the luminance (reflection of light) of objects. Participants were asked to indicate which of two objects was more luminous. There were various pairs of objects – patches of colour, upright faces, upside-down faces, faces of a different race than the participant, and faces of a similar race. Previous studies on high-order processing had shown that people are better at processing faces of their own race. It now turns out that this advantage holds for low-order processing, too.

“The traditional school of thought would have said that race shouldn’t matter because luminance sensitivity is an early critical response and shouldn’t be modulated by meaning. But again, we have found that basic visual responses somehow get modulated by this contextual information,” she said.

Dr Chang has also begun to study how the brain processes sound, to see how visual training affects auditory processing and vice versa – which would lend further support to the notion that the hierarchical, compartmentalised view of the brain needs a rethink.

“We’ve been showing that we really need to consider an interaction between brain regions to support visual functioning, and to extrapolate that to understand interactions between vision and other modalities as well,” she said.

Getting a clearer picture will be a long, slow process, given the complexity of the brain and the fact that this line of investigation represents a paradigm shift in our understanding of how the brain functions. “One hundred years from now, we’ll know a lot more,” Dr Chang added.

Sample images shown to participants in the 3D experiments. Faces (upright or inverted) and non-face surfaces were presented as special 3D stimuli called stereograms, which provide separate images to the left and right eyes when special glasses are worn. The resulting percept is of an object in 3D, and observers in these experiments were required to judge its position in depth.

fMRI results from the 3D experiments superimposed on the brain of a representative participant. Depth judgments don’t require explicit identification of object content. Yet human observers’ behavioural sensitivity in judging the position of objects along the depth plane is significantly altered by object meaning. Corresponding context-based modulations of depth responses are found along intermediate (V3), dorsal (parietal), and ventral (inferotemporal) cortex.

VISION CHALLENGED

How we see objects in the world may depend on what we are looking at. This finding is upending our understanding of the workings of the brain.

“Ultimately, what we perceive is not just a reconstruction of which neuron is firing, but a product of experience.”

Dr Dorita HF Chang
